I suddenly became very interested in Twitter bots because of @FFD8FFDB. That interview led me to Beau Gunderson, an experienced bot-maker and general creator of both computer things and people things. In answer to email questions, he was more voluble than I expected — in a delightful way! — so this is a long one. I’m sure neither of us will be offended if you don’t have time to read it all now. I posted the Medium version first so you can save it for later using Instapaper or something equivalent!

Note: I did not edit Beau’s answers at all. He refers to most people — and bots — by Twitter username, which I think is very reasonable. It’s good to present people as they have presented themselves!

Sonya: How did you get into generative art? Why does it appeal to you, personally?

Beau: my first experience with generative art was LogoWriter in first or second grade. i don’t remember if it was a part of the curriculum or not but i spent a lot of time with it, figuring out the language and what it could be made to do. i feel like there was some randomness involved in that process because i didn’t have a full understanding of the language and so i would permute commands and values to see what would happen. i gave some of the sequences of commands that drew recognizable patterns names.

in terms of getting into twitter bots i’m certain that some of the first bots i came across were by thricedotted and were of the type that thrice has described as “automating jokes”; things like @portmanteau_bot and @badjokebot (which are both amazing). the first bot i made was @vaporfave, which i still consider unfinished but which is also still happily creating scenes in “vaporwave style”, really just a collection of things that i associated with the musical genre of vaporwave (which i do actually enjoy and listen to). it has made more than 10,000 of these little scenes.

a lot of the bots i had seen were text bots, and so i became very interested in making image bots as a way to do something different within the medium. i gave a talk at @tinysubversions’ bot summit 2014 about transformation bots (though mostly about image transformation bots): http://opentranscripts.org/transcript/image-bots/ and my next bot was @plzrevisit, which was a kind of “glitch as a service” bot that relied on revisit.link.

as far as why generative art appeals to me, i think there are a few main reasons. i like the technical challenge of attempting to create a process that generates many instances of art. it would be one thing to programmatically create one or a hundred scenes for vaporwave, or to generate 10,000 and then pick the 10 best and call it done. but it feels like a different challenge to get to the point where i’m satisfied enough with the output of every run to give the bot the autonomy to publish them all. i also like to be surprised by them. and they feel like the right size for a lot of my ideas… they’re easy enough to knock out in a day if they’re simple enough. this is probably why i also haven’t gone back to a lot of the bots and improved them… they feel unfinished but “finished enough”.

in thinking about it some of the appeal is probably informed by my ADHD as well. i prefer smaller projects because they’re more manageable (and thus completable), and twitter bots provide a nearly infinitely scrolling feed of new art (and thus dopamine).

Sonya: How do you conceptualize your Twitter bots — are they projects, creatures, programs, or… ?

Beau: well, they’re certainly projects (i think of everything i do as a project; all my code lives in ~/p/ on my systems, where p stands for projects)

but the twitter bots i think of as something more… they don’t have intelligence but they are often surprising.

aside from tweeting “woah” at the bots i often will reply or quote and add my own commentary.

even though i know they don’t get anything from the exchange i still treat them as part of a conversation sometimes. they’re creators but i don’t put them on the same level as human creators.

Sonya: As a person who has created art projects that seem as though they are intelligent — I’m thinking of Autocomplete Rap — what are your thoughts on artificial intelligence? Do you think it will take the shape we’ve been expecting?

Beau: autocomplete rap was by @deathmtn, i’m only mentioned in the bio because he made use of the rap lyrics that i parsed from OHHLA and used in my bot @theseraps. but i think @theseraps does seem intelligent sometimes too. it pairs a line from a news source with a line from a hip-hop song and tries to ensure that they rhyme. when the subjects of both lines appear to match it feels like the bot might know what it’s doing.

my thoughts on artificial intelligence are fairly skeptical and i’m also not an expert in the field. i’ll say i don’t think it represents a threat to humanity. i don’t think of my work as relating to AI, it’s more about intelligence that only appears serendipitously.

Sonya: Imagine a scenario where Twitter consists of more bots than humans. Would you still participate?

Beau: yes. i talk to my own bots (and other bots) as it is. @godtributes sometimes responds to tweets with awful deities (like “MANSPLAINING FOR THE MANSPLAINING THRONE”) and i let it know that it messed up (wow i think i’ve tweeted at @godtributes more than any other bot).

i also have an idea i’d like to build that i’ve been thinking of as “bot streams” — basically bot-only twitter with less functionality and better support for posting images. and with a focus on bots using other bots work as input, or responding to it or critiquing it (an idea i believe @alicemazzy has written about).

Sonya: How does power play into generative art? When you give a computer program the ability to express itself — or at least to give that impression — what does it mean?

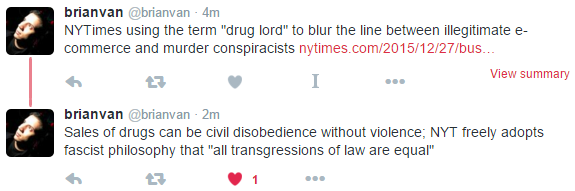

Beau: i try to be very aware of the power even my silly bots have. @theseraps uses lines from the news, which can contain violence, and the lines from the hip-hop corpus which can also contain violence. when paired they can be very poignant but it’s not something i want to create or make people look at. there are libraries to filter out potentially problematic words so i use one of those and also do some custom filtering.

this is one aspect of the #botALLY community i really like; there’s an explicit code of conduct and there’s general consensus about what comprises ethical or unethical behavior by bots. @tinysubversions has even done work to automate detecting transphobic jokes so that his bots don’t accidentally make them.

i wrote a bot called @___said that juxtaposes quotes from women with quotes from men from news stories as a foray into how bots can participate in a social justice context. just seeing what quotes are used makes me think about how sources are treated differently because of their gender. while i was making the bot i also saw how many fewer women than men were quoted (which prompted an idea for a second bot that would tweet daily statistics about the genders of quotes from major news outlets)

i think @swayandsea’s @swayandocean bot is very powerful — the bot reminds its followers to drink more water, take their meds, take a break, etc.

i also really like @lichlike’s @genderpronoun and @RestroomGender, bots that remind us to think outside of the gender binary.

there’s another aspect of power i think about, which brings me back to LogoWriter. LOGO was a fantastic introduction for me as a young person to the idea of programming; it gave me power over the computer. the idea of @lindenmoji was to bring that kind of drawing language and power to twitter, though the language the bot interprets is still much too hard to learn (i don’t think anyone but me has tweeted a from-scratch “program” to it yet)

your last question, about what it means to give a computer the ability to express itself… i don’t quite think of it that way. i’m giving the computer the ability to express a parameter set that i’ve laid out for it that includes a ton of randomness. it’s not entirely expressing me (@theseraps has tweeted things that i deleted because they were “too real”, for example), but it’s not expressing itself either. i wasn’t smart enough, or thorough enough, or didn’t spend the time to filter out every possible bad concept from the bot when i created the parameter space, and i also didn’t read every line in the hip-hop lyrics corpus. so some of the parameter space is unknown to me because i am too dumb and/or lazy… but that’s also where some of the surprise and serendipity comes from.

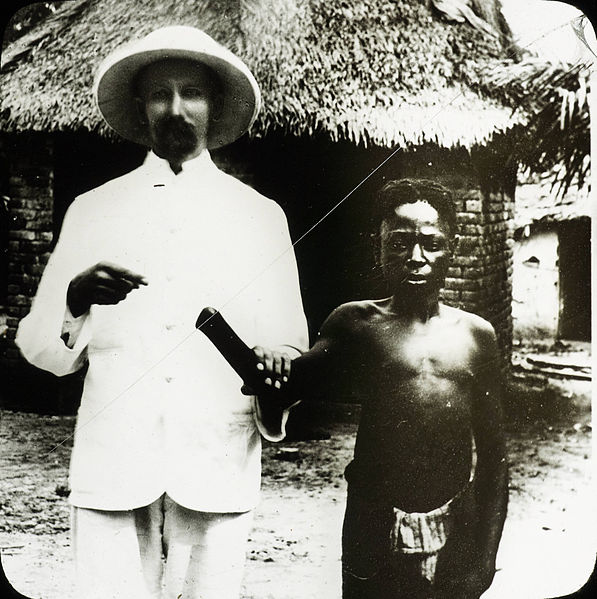

i think as creators of algorithms we need to think about them as human creations and be aware that human assumptions are baked in.

p.s.: based on the content of the newsletter so far i feel like @alicemazzy, @aparrish, @katierosepipkin, @thricedotted, and @lichlike would be great for you to talk to about bots or art or language or just in general 🙂