“Humans are actually extremely good at certain types of data processing. Especially when there are only few data points available. Computers fail with proper decision making when they lack data. Humans often actually don’t.” — Martin Weigert on his blog Meshed Society

Weigert is referring to intuition. Metaphorically, human minds function like unsupervised machine learning algorithms. We absorb data, in the form of experiences and anecdotes, and we spit out predictions and decisions. We define the problem space based on the inputs we encounter and define the set of acceptable answers based on the reactions we get from the world.

There’s no guarantee of accuracy, or even of usefulness. It’s just a system of inputs and outputs that bounce against the given parameters. And it’s always in flux — we iterate toward a moving reward state, eager to feel sated in a way that a computer could never understand. In a way that we can never actually achieve. (What is this “contentment” you speak of?)

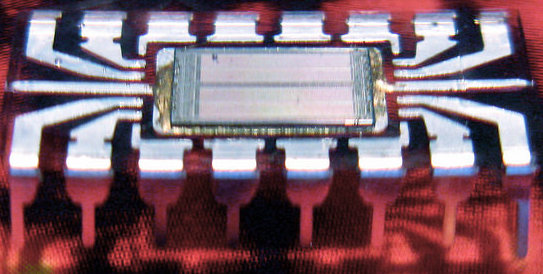

Photo by Steve Jurvetson.

Kate Losse wrote in reference to the whole Facebook “Trending Topics” debacle:

“no choice a human or business makes when constructing an algorithm is in fact ‘neutral,’ it is simply what that human or business finds to be most valuable to them.”

That’s the reward state. Have you generated a result that is judged to be valuable? Have a dopamine hit. Have some money. Have all the accoutrements of capitalist success. Have a wife and a car and two-point-five kids and keep absorbing data and keep spitting back opinions and actions. If you deviate from the norms that we’ve collectively evolved to prize, then your dopamine machine will be disabled.

It’s only a matter of time until we make this relationship more explicit, right? Your job regulating the production of foozles and whizgigs will require brain stem and cortical access. You can be zapped with fear or drowned in pleasure whenever it suits the suits.
